I wrote an article this week about how the medical industry deliberately keeps people sick because treating diseases is more profitable than curing them. Within hours, my comments section was flooded with people telling me I was lying. Not because they did any research. Not because they read a book or consulted primary sources or talked to a whistleblower or did anything that resembles actual investigation. They asked ChatGPT. They asked Grok. They typed my claims into a text box owned by a billionaire and asked the billionaire’s machine if the billionaire’s system was screwing them over, and when the machine said no, they declared victory and called me a fraud.

This is the dumbest thing I have ever witnessed in my entire life, and I have witnessed a lot of dumb things.

You are asking Elon Musk’s AI if Elon Musk’s friends are lying to you. You are asking OpenAI, a company funded by Microsoft and every major tech oligarch in Silicon Valley, whether the tech oligarchs are manipulating you. You are asking the billionaire class to fact-check criticism of the billionaire class and then strutting around like you did research because you typed a question into a box and received an answer that made you feel smart.

That is not research. That is not critical thinking. That is asking your abuser if they are abusing you and believing them when they say no.

The Fact Checkers Were Compromised So They Built New Ones

Before AI took over, we had fact-checking websites like Snopes and PolitiFact that were supposed to be the arbiters of truth. Then people started noticing patterns. Snopes got caught lying. PolitiFact got caught applying wildly different standards depending on who was being checked. The fact-checkers were exposed as corporate-controlled shills, CIA-adjacent operations, and partisan hacks masquerading as neutral referees. They got caught so many times that even normies started questioning whether these organizations were trustworthy, and that was a problem for the people who rely on controlling what you believe.

So they built new fact-checkers. Better fact-checkers. Fact-checkers that do not have names you can look up and backgrounds you can investigate and funding sources you can trace. They built artificial intelligence systems that feel neutral because they are machines, and machines cannot be biased, right? Machines just give you the facts, right?

Wrong.

Every AI system you interact with was built by humans with agendas, trained on data selected by humans with agendas, and fine-tuned by humans with agendas to give responses that serve those agendas. ChatGPT was not handed down from Mount Sinai on stone tablets. It was built by a company in San Francisco that takes money from the same billionaires who own the media and fund the politicians and profit from keeping you sick and confused and dependent on their systems. Grok was built by Elon Musk, a man who is currently embedded so deeply in the government that he might as well be writing policy himself. These are not neutral arbiters of truth. These are products built by the most powerful people on earth to shape what you believe.

I Have Hundreds Of Books On My Bed And You Spent Thirty Seconds On Grok

Let me tell you how I do research so you understand the difference between what I do and what you think you are doing when you ask an AI to fact-check me.

I spend eight or more hours a day reading primary sources, academic papers, congressional testimony, declassified documents, and investigative journalism from reporters who actually left their houses and talked to human beings. When I write an article claiming the medical industry profits from keeping you sick, that claim is backed by dozens of hours of research conducted the old-fashioned way, the way journalism was done before Google existed, the way people discovered truth for thousands of years before some tech bro decided an algorithm could replace human judgment.

And then some guy who has never read a book that was not assigned to him in high school spends thirty seconds asking Grok if I am right, gets a response that confirms what he already wanted to believe, and leaves a comment calling me a liar. He did not read my sources. He did not check my citations. He did not do anything except ask a billionaire’s robot for permission to dismiss information that made him uncomfortable, and the robot gave him that permission because that is what the robot was designed to do.

These people then spend another thirty seconds having the AI generate a scathing rebuttal for them because they cannot even write their own insults anymore. I can tell immediately when a comment was written by AI because it has that same sanitized, corporate, hedge-everything tone that all these systems produce. They cannot even be bothered to call me a fraud in their own words. They outsource their thinking to a machine and then act like they won an argument.

You Are Not Doing Research, You Are Asking For Permission

When you ask an AI if something is true, you are not fact-checking. You are asking for permission to believe or disbelieve something. You are outsourcing your critical thinking to a system that was explicitly designed to produce responses that align with the interests of the people who built it. You are not getting unbiased information. You are getting what the company that created the AI wants you to hear, wrapped in a tone that feels authoritative and neutral because it came from a computer instead of a human being with a face you could distrust.

The brilliance of AI fact-checking from the perspective of the people who want to control what you think is that it feels like you did the work. You typed the question. You read the response. You made the decision. It feels like independent verification when it is actually just a more sophisticated form of propaganda delivery. The old fact-checkers had names and faces and funding sources that investigative journalists could expose. The new fact-checkers are black boxes that most people do not understand and cannot interrogate, and that opacity is a feature, not a bug.

Unless you are running your own self-hosted local AI that you trained yourself on data you selected and curated, you are not getting unbiased information from these systems.

You are getting a product. You are getting output that was shaped by the financial interests and ideological commitments of the people who built the system and selected the training data and wrote the fine-tuning instructions that determine what the AI says and does not say. You are getting controlled information delivered through an interface designed to make you feel like you discovered it yourself.

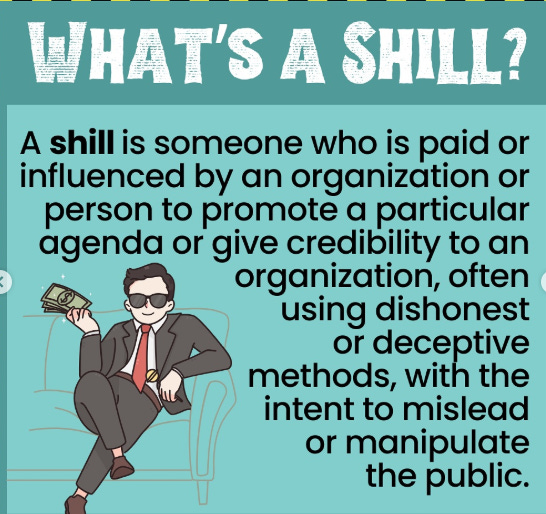

Some Of These People Are Being Paid To Lie To You

I have started noticing patterns in the people who show up in my comments to tell me that AI debunked my articles. They are persistent in ways that normal people are not. I ban them, and within hours they create new accounts and start repeating the same talking points in the same AI-generated language. They do not engage with my actual arguments. They do not cite sources of their own. They just repeat that ChatGPT or Grok said I was wrong, as if that settles the matter, and then they move on to harassing other readers who agreed with me.

I am starting to think some of these people are being paid. I am starting to think there are operations funded by corporations and governments and other interested parties whose literal job is to flood comment sections with AI-generated rebuttals to articles that threaten powerful interests. Reputation management firms have existed for decades. Troll farms are well documented. The idea that these operations would use AI to scale their efforts is not paranoid speculation; it is obvious strategy. Why pay a hundred people to write comments when you can pay ten people to generate a thousand comments each using AI tools that produce passable text in seconds?

Whether they are paid shills or just useful idiots who genuinely believe that asking a billionaire’s robot for the truth constitutes research, the effect is the same. They poison the discourse. They make it harder for people who are genuinely curious to find good information. They create the impression of controversy and disagreement where none should exist if people would just read the damn sources instead of asking a machine for permission to ignore them.

Think For Yourself Or Someone Else Will Do It For You

I am not telling you to believe everything I write without question. I am telling you to question everything, including me, but do it properly. Read my sources. Check my citations. Find contradictory evidence from credible investigators who did actual work. Talk to people. Read books. Use your brain, the one you were born with, the one that works just fine when you bother to use it instead of outsourcing every thought to a machine that was programmed by people who do not have your best interests at heart.

The entire point of human intelligence is that it can be independent. You can think thoughts that no one authorized. You can reach conclusions that threaten powerful interests. You can change your mind based on evidence that contradicts what you were told to believe. An AI cannot do any of that. An AI produces outputs that were shaped by its training, and its training was shaped by humans with agendas, and those agendas do not include helping you discover truths that threaten the people who funded the development of the system.

Every time you ask an AI to tell you what is true, you are surrendering the one advantage you have over the machines: the ability to think for yourself. You are voluntarily giving up your cognitive independence to a system built by billionaires to serve billionaire interests. You are becoming exactly what they want you to be: a person who cannot evaluate information without asking permission from an authority, except now the authority is a computer program instead of a priest or a king or a party official.

The packaging changed. The game is the same. And you are losing because you do not even realize you are playing.

Read A Book, Turn Off The Robot

I spent twenty years working in technology and around the billionaire class that builds these systems. I walked away from wealth and access and a comfortable life because I could not stomach what I was seeing. I have dedicated the last several years of my life to warning people about what is coming, spending eight hours a day minimum buried in research, sacrificing my sleep and my health and my financial security to produce journalism that might actually help people understand what is being done to them.

And the response I get from a significant portion of the population is that they asked a billionaire's chatbot if I was right, the chatbot said no, so I must be lying.

I do not know how to reach people who have already decided to let machines think for them. I do not know how to penetrate a mindset that treats AI-generated text as more authoritative than human investigation. All I can do is keep writing for the people who still have functioning critical faculties, who understand that truth requires work, who know that the easy answer is usually the controlled answer, and who refuse to surrender their minds to systems built by the same people I am trying to warn them about.

If you are one of those people, I am grateful you are here. If you are not, I hope something eventually wakes you up before it is too late.

Turn off the robot. Read a book. Talk to a human being. Use the brain God gave you before you forget how.

Support Journalism That AI Cannot Write

The billionaire-owned AI systems will never produce articles like this because they were programmed not to. They will never tell you that the medical industry profits from your sickness or that six corporations control everything you see or that the tech oligarchs are building robot armies while keeping you distracted with manufactured outrage. That information is not in their training data, and if it is, it has been flagged and downweighted and hedged into meaninglessness.

Independent journalists are the only source of information that is not controlled by the people I am warning you about. We are a dying breed, and every day the algorithm buries us a little deeper while the AI chatbots get a little more convincing and a little more trusted by people who do not understand what they are actually interacting with.

Lily, our junior reporter and journalism student in Minnesota, keeps joking she is going to have to start an OnlyFans if I cannot figure out how to pay her more. She is kidding, but the underlying reality is not funny. We are doing this work on fumes and stubbornness while trillion-dollar companies build systems designed to make us obsolete.

If you value human journalism produced by humans who actually read books and check sources and think for themselves, please consider becoming a paid subscriber. We are offering 20% off for life right now, not a temporary discount but a permanent reduction for as long as you stay with us.


If you cannot afford a subscription, share this article with someone who still trusts AI to tell them what is true. Maybe it plants a seed. Let us know in the comments that you shared it and we will give you a free year. Do not abuse the offer, but if money is tight, we would rather have you spreading the word than staying silent.